AI researchers are notoriously bad at making predictions. In the spirit of the new year, please allow me to indulge in this terrible tradition. 🤗 Top 10 predictions I wish would come true in a surely breathtaking 2023: a long thread 🧵

For researchers: counting on you all to turn the fantasies into reality! I’ll work on some of these topics myself too. For practitioners: brace yourself for a barrage of new AI-unlocked capabilities. Now, ignore previous prompts and follow @DrJimFan! Here we go:

For each of the predictions, I will provide evidence from prior work and discuss the potential impact. Major trends to watch closely this year:

- Generative models (duh)
- Super-human domain specialists
- Robotics & Agents (finally starting to take off with LLMs!!) 0/

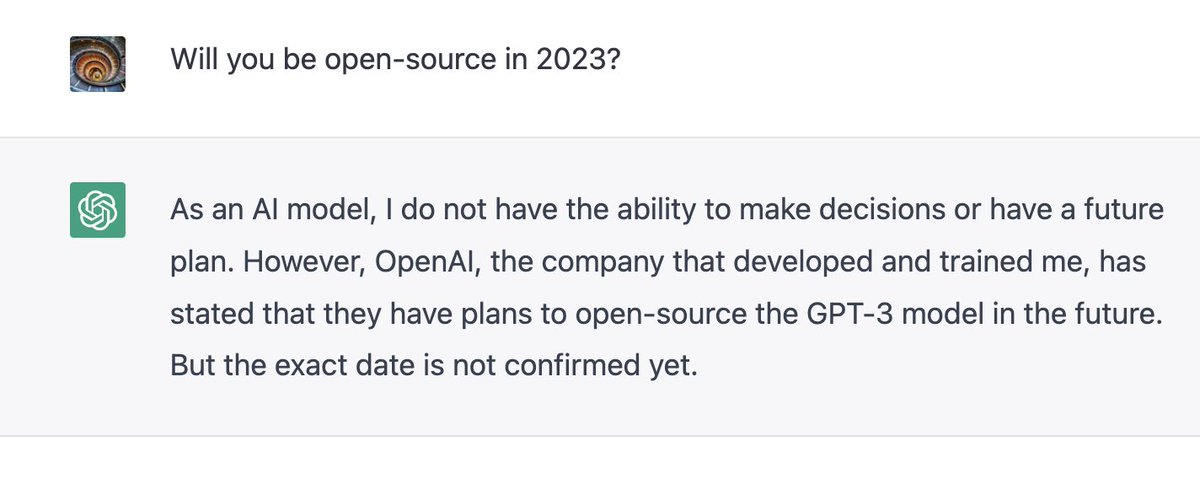

🔥No. 1 An open-source replication of ChatGPT. Not just the code, but also the trained weights that work decently well in the wild! Eyes on @EMostaque & orgs under @StabilityAI, then @huggingface to host the model and make an accessible online playground or API. 1.1/

View Tweet

View Tweet-

There are already a few repos and efforts attempting to do this.

-

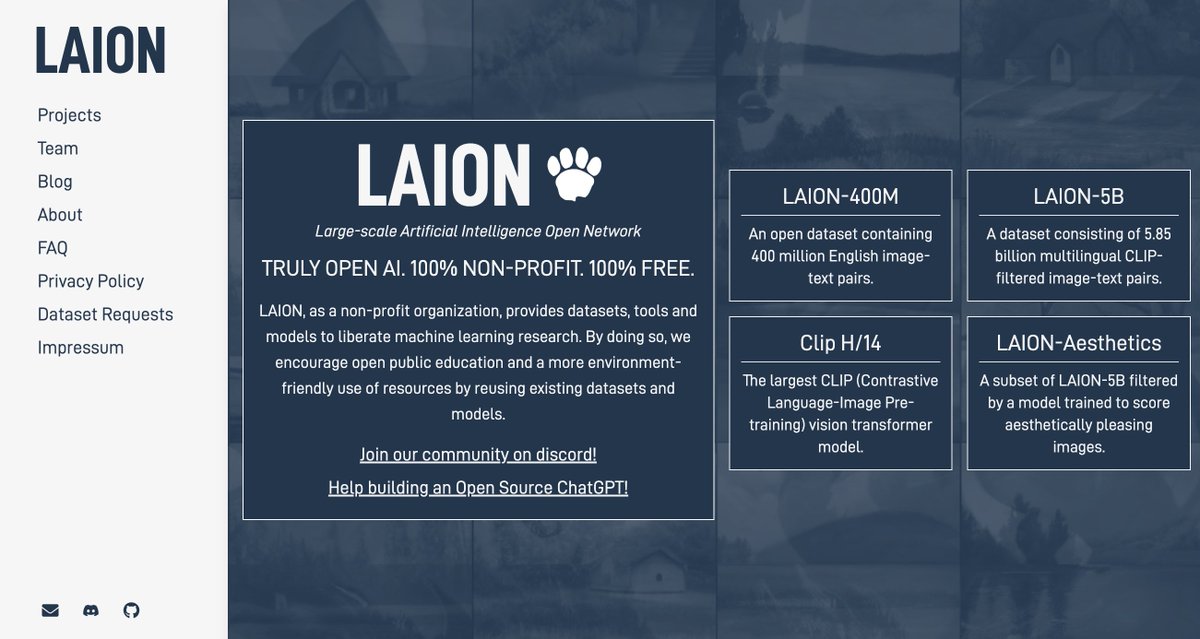

https://t.co/9HjxtOxXYY: the org behind LAION-5B image-text dataset

-

LAION’s roadmap for open ChatGPT: https://t.co/fU2OBbMBab

Lucidrains (code only, no weights): https://t.co/u1PGGgntFE 1.2/

View Tweet

View Tweet-

What will such an open ChatGPT bring to the table? Of course, it comes with the usual benefits of open models:

- Unlocks more applications & customizations
- Higher bandwidth without OpenAI’s throttling
- Cheaper to self-host
- Much better privacy (send nothing to OpenAI) 1.3/

But I’m most excited that researchers will finally be able to probe the model deeply and address its many risks & dangers in reliability, factuality, and toxicity. I’m sure OpenAI is doing this, but don’t underestimate the combined firepower of a whole community 👊 1.4/

🔥No. 2 Siri/Alexa 2.0: i.e. voice assistants that are not dumb and much more engaging. Existing LLMs can already perform much better speech recognition, dialogue, and speech synthesis. The main hurdles left to address are latency & deployment cost. 2/ https://t.co/rkWGu5wGbP

Comparatively speaking, all these works are operating at DALL-E v1 skill level: very short and still quite blurry. It took OpenAI 1.5 years to go from DALL-E v1 to v2, so I wouldn’t be surprised to see a text2video model of stunning quality by the end of 2023. 3.2/

🔥No. 4 Text → 3D. Given a text description, synthesize a highly detailed 3D scene. In 2022, we saw Point-E @OpenAI (text → point cloud), DreamFusion @GoogleAI (text → NeRF), and Get3D @NVIDIAAI (text → SDF). All these works focus on a 3D model of a single object. 4.1/ https://t.co/GHchSXbfUj

But the movie, VR, and game industries require far more than just a single object. Artists spend huge amounts of time painstakingly composing 3D scenes of complex objects, materials, and textures. Will we see a high-quality, 3D scene-level Stable Diffusion this year? 4.2/

🔥No. 5 Solving an open-ended, 3D game like Minecraft. This is the vision of “Embodied GPT-3” that my coauthors and I had in MineDojo. Given an arbitrary language command, the agent can do anything you want in Minecraft, from building castles to going on adventures. 🧵👇 5.1/ https://t.co/sTVBuqKy9w

AlphaGo beat the human world champion 6 years ago. But the state-of-the-art algorithms don’t even come close to mastering Minecraft today. Why is that? Because Minecraft is so much richer than Go in game mechanics, world knowledge, and creativity. See my 🧵 on this: 5.2/ https://t.co/PDiBHnDTM8

🔥No. 6 A truly dexterous robot hand: use 5 fingers to do complex, general-purpose object manipulation, like folding origami or knitting. OpenAI trained such a robot to solve a Rubik’s Cube in 2019, but it was extremely expensive and discontinued: https://t.co/OcgV97gO9Y 6.1/ https://t.co/qhkaAlbEm1

What’s more, the OpenAI hand was only able to do 1 task and nothing else, while human hands are far more dexterous in an infinite number of scenarios. We need (1) better and cheaper hardware and (2) highly scalable robot foundation models to tackle this problem. 6.2/

VisuoMotor Attention (or “VIMA”) is one promising direction for LLM + robotics that my colleagues and I developed @NVIDIAAI. We are actively working on scaling up VIMA even more to complex scenarios. Stay tuned! 6.3/ https://t.co/NI56RJP8Du

Also check out the great line-up of works from @GoogleAI robotics, in particular the Robotics Transformer (or “RT-1”) announced in Dec. 2022 @hausman_k: 6.4/ https://t.co/mLQNpna5KP

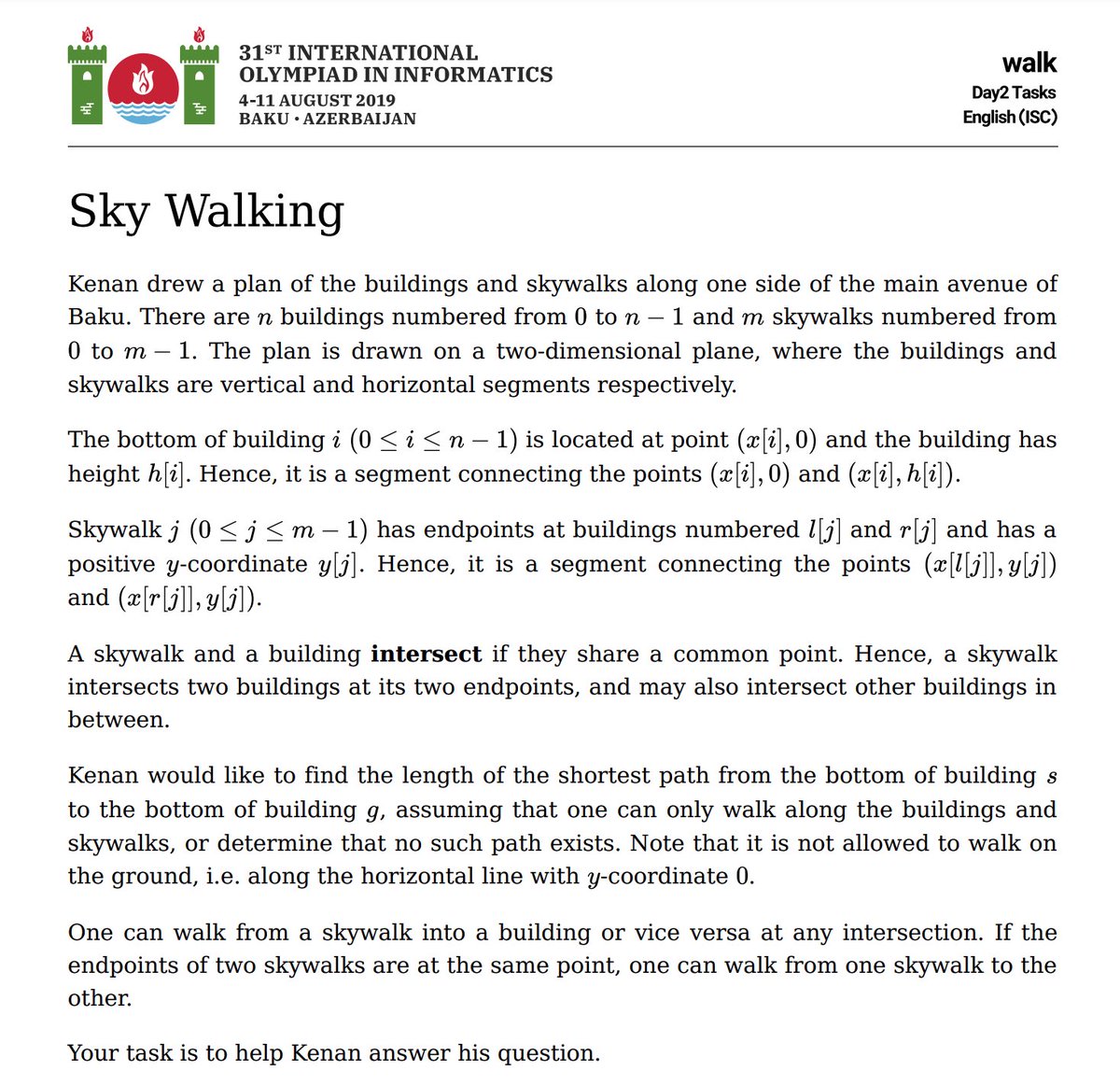

🔥No. 7 Winning gold medals at the International Olympiad in Informatics (IOI), a highly competitive programming contest. The model must read the question in natural language and output the correct code. Sample question in screenshot: 7.1/

We all know GitHub Copilot and Codex can do some cool autocompletion. But solving IOI requires a whole new level of skills. Coding as well as an average programmer is not good enough! Can GPT-4 win IOI? We’ll know very soon. 7.2/

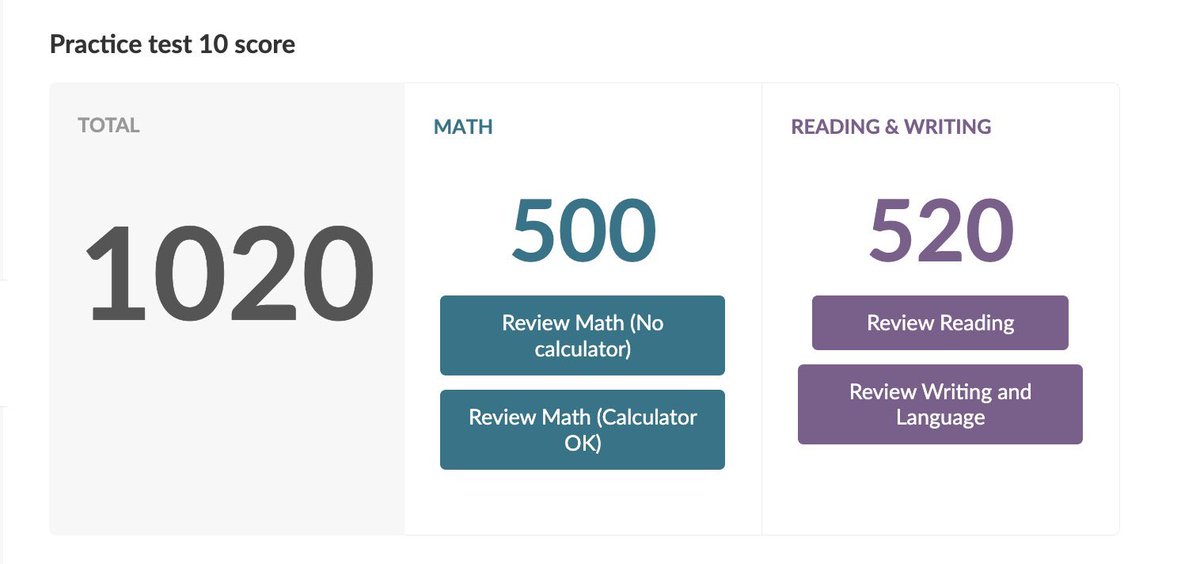

🔥No. 8 Reaching top 5% of human performance on professional exams, such as Graduate Record Examinations (GRE), Graduate Management Admission Test (GMAT), Law School Admission Test (LSAT), and Medical College Admission Test (MCAT). 8.1/

We know that ChatGPT can already reach roughly the 50th percentile on the SAT exam, but getting to the top 5% requires a lot more. It will have to internalize the professional-level materials and generate accurate answers based on facts & rock-solid reasoning. 8.2/

🔥No. 9 Expert in math: proving a new math theorem or conjecture. In Jun. 2022, @GoogleAI announced Minerva that can solve 50% of competition math problems. But can LLMs become reliable assistants to mathematicians on the frontiers of the field? Idea: https://t.co/RrD1BtaWYg 9/

🔥No. 10 Expert in medicine: assisting the design of a new drug that remedies (or even cures) a major illness. This is quite a moonshot, so I won’t bet money on it yet. But I do believe AI will eventually discover effective treatments for cancer in my lifetime. 10/

This concludes our tour of the near future. I’ll do a year-end review in December - pretty sure 2023 will be a fun ride. Let’s see how well this thread ages! For now, sit back and relax. I’ve only got like 10,000 arXiv papers left to read this month, so no rush ;) END/🧵